Project Goals

This is a summary of the work I committed to my “Latin American Art and Artificial Intelligence” GitHub repository during the winter of 2022. I recently redesigned my portfolio, so I decided to start documenting the project I began working on this past winter!

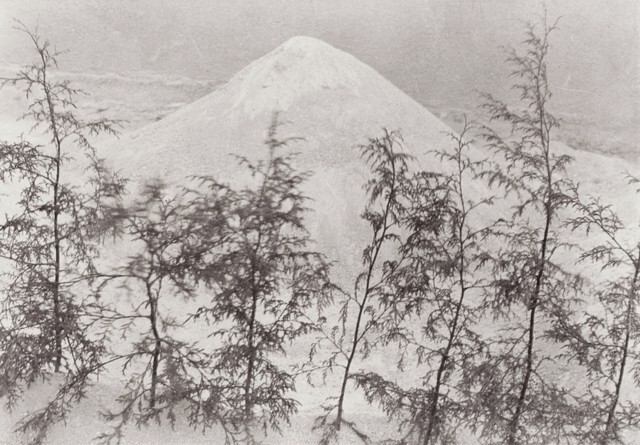

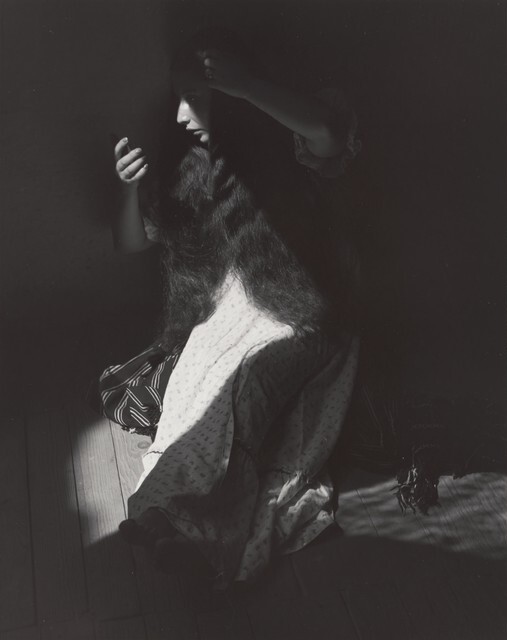

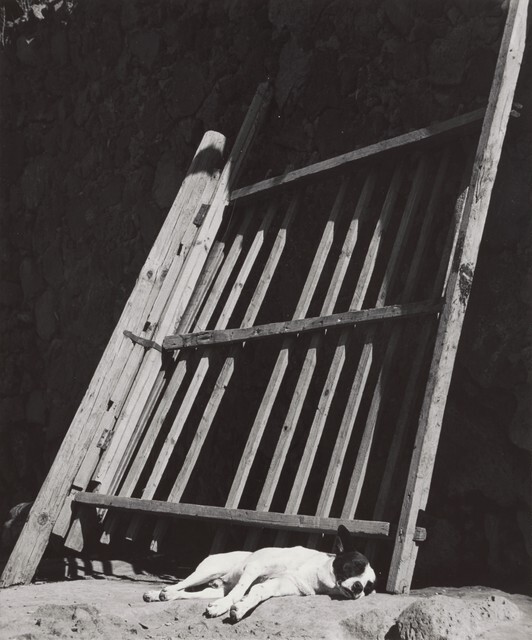

I want to create a state-of-the-art text-to-image model. My main focus is on including more Latin American images in the project, so that I can visualize the style and transfer it to images generated from text prompts.

I am using open-source data from the National Gallery of Art (NGA) database, which was made publicly accessible via an API.

SQL Querying the NGA Database

The first thing I did was find a dataset. The NGA ‘Open Data’ program’s database contains many useful tables that can be queried using SQL.

The database is also extensive and contains pieces from around the world. I used it to write SQL queries for Latin American as well as non-Latin American art, and I will go over that process later in this post. One challenge I overcame was using the API to download the images in sufficient resolution. I did this by setting the size argument in the API to full, so that each image is returned at its full size. I expect to manipulate the images locally later on.
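As a rough sketch of the size setting described above, assuming the image API follows the IIIF Image API URL pattern (region, size, rotation, then quality and format): the helper name `build_image_url`, the example base URL, and the default values are hypothetical and only for illustration, not the exact code from my repository.

```python
def build_image_url(base_url: str, size: str = "full", region: str = "full",
                    rotation: int = 0, quality: str = "default",
                    fmt: str = "jpg") -> str:
    """Build an IIIF-style image URL (assumed pattern, see lead-in).

    base_url is the per-image base URL taken from the metadata table.
    size="full" asks for the image at its full size; a smaller size
    could be requested instead if bandwidth or disk space is a concern.
    """
    return f"{base_url.rstrip('/')}/{region}/{size}/{rotation}/{quality}.{fmt}"


# Hypothetical base URL, used only to show the shape of the result.
example_url = build_image_url("https://example.org/iiif/abc123")
```

Keeping the URL construction in one small function makes it easy to switch from full-size downloads to a fixed size later without touching the download loop.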

The repo also includes a very easy-to-read data dictionary file, which made it easier to connect the separate tables, for example, when joining them to produce the full dataset.

Cleaning the Datasets & Feature Engineering

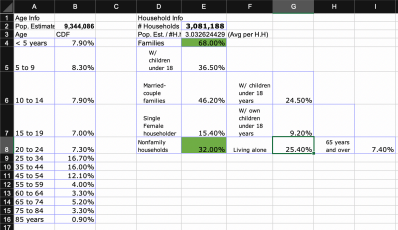

For both datasets, I cleaned the data to prepare it for input into an M.L. algorithm. For example, text was processed into tokens, categorical variables were converted into their one-hot representations, and statistical information was added. I also used this time to do basic exploratory data analysis on the composition of the dataset, for example, the percentage of artworks in the dataset by nationality, artist, or medium.
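To make these steps a little more concrete, here is a minimal sketch using only the Python standard library. The function names and the toy rows are invented for illustration (they are not real NGA records), and the actual cleaning code in my repository may differ.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase a string and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def one_hot(values):
    """Return the sorted categories and one 0/1 vector per input value."""
    categories = sorted(set(values))
    vectors = [[1 if v == c else 0 for c in categories] for v in values]
    return categories, vectors


def composition(values):
    """Percentage of rows per category, rounded to one decimal place."""
    counts = Counter(values)
    total = sum(counts.values())
    return {k: round(100 * n / total, 1) for k, n in counts.items()}


# Toy rows, made up for this example only.
rows = [
    {"title": "Toy Landscape", "medium": "oil on canvas", "nationality": "Mexican"},
    {"title": "Toy Portrait", "medium": "lithograph", "nationality": "Brazilian"},
    {"title": "Toy Still Life", "medium": "oil on canvas", "nationality": "Mexican"},
]

title_tokens = [tokenize(r["title"]) for r in rows]
medium_categories, medium_vectors = one_hot([r["medium"] for r in rows])
nationality_share = composition([r["nationality"] for r in rows])
```

The same `composition` helper works for any categorical column, so it covers the nationality, artist, and medium breakdowns mentioned above.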

Downloading Latin American Images

Initially, I downloaded the Latin American images before the non-Latin American images because it was a MUCH smaller dataset: the L.A. dataset contained only ~400 rows, while the non-L.A. dataset contained ~300,000!

According to the NGA, the actual number of images in the dataset is ~130,000. My dataset has duplicates because of the nature of the SQL queries I used. An image can appear in multiple rows because it is listed once for the artist and again for the sponsor who donated the art to the museum. It can also happen when multiple artists are connected to a single image. I will be making a separate post about this issue later on!
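The row inflation is easy to reproduce on a toy example. The sketch below uses an in-memory SQLite database; the table names, column names, and rows are simplified assumptions made up for illustration and are not the exact NGA schema or my actual queries.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Hypothetical, simplified schema: one artwork can link to many people.
CREATE TABLE objects (objectid INTEGER PRIMARY KEY, title TEXT);
CREATE TABLE constituents (constituentid INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE objects_constituents (objectid INTEGER, constituentid INTEGER, role TEXT);
INSERT INTO objects VALUES (1, 'Toy artwork A'), (2, 'Toy artwork B');
INSERT INTO constituents VALUES (10, 'Artist X'), (11, 'Donor Y'), (12, 'Artist Z');
INSERT INTO objects_constituents VALUES
    (1, 10, 'artist'), (1, 11, 'donor'), (2, 12, 'artist');
""")

# Joining through the link table yields one row per (artwork, person) pair,
# so artwork 1 appears twice: once for its artist and once for its donor.
joined = conn.execute("""
    SELECT o.objectid, o.title, c.name, oc.role
    FROM objects o
    JOIN objects_constituents oc ON oc.objectid = o.objectid
    JOIN constituents c ON c.constituentid = oc.constituentid
    ORDER BY o.objectid, c.constituentid
""").fetchall()

# Selecting only artwork-level columns with DISTINCT collapses the repeats.
unique_objects = conn.execute("""
    SELECT DISTINCT o.objectid, o.title
    FROM objects o
    JOIN objects_constituents oc ON oc.objectid = o.objectid
    ORDER BY o.objectid
""").fetchall()
```

Here `joined` has three rows for only two artworks, which is the same effect, at a much smaller scale, as ending up with far more rows than there are images.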

Downloading the smaller dataset first helped me understand how to store and download the images efficiently. It also let me fine-tune the algorithms I had created to clean the datasets on the smaller table before running them on the larger one, and test a script to transfer the images into the directory structure I was planning. Working with a smaller table meant much less delay if something went wrong and I had to go back and fix it.

Building the Directory Structure

I wanted to create a structure similar to ArtBench, the popular art genre detection dataset. Doing so would help train a PyTorch model more efficiently. To do this, I created the folder LatinAmerican-2-imagefolder-split as the root. Then, inside the root, I made two subdirectories, one named ‘test’ and the other ‘train’, and added the images that would be used to train and test the image model. Since I only had ~350 unique images to work with, an 80/20 split meant I only had ~280 images (80% of 350) to train the model, with ~70 left for testing. This is very little, which is why we can use non-Latin American images as well. In the future, once its size is increased, the L.A. dataset could still be used for ‘style transfer’ or ‘style interpolation’.
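A minimal sketch of how such a split could be scripted, using only the standard library. The function name `split_into_train_test`, the fixed seed, and the choice to copy rather than move files are my assumptions for illustration, not necessarily what the script in my repository does.

```python
import random
import shutil
from pathlib import Path


def split_into_train_test(image_paths, root, train_fraction=0.8, seed=0):
    """Copy images into root/train and root/test using a seeded shuffle.

    Returns the number of images placed in each split.
    """
    paths = sorted(Path(p) for p in image_paths)  # stable order before shuffling
    random.Random(seed).shuffle(paths)            # reproducible shuffle
    cut = round(len(paths) * train_fraction)      # e.g. 350 images -> 280 train
    splits = {"train": paths[:cut], "test": paths[cut:]}
    for name, members in splits.items():
        target = Path(root) / name
        target.mkdir(parents=True, exist_ok=True)
        for src in members:
            shutil.copy2(src, target / src.name)
    return {name: len(members) for name, members in splits.items()}
```

Calling it with a list of downloaded image paths and the root folder name would produce the train and test subdirectories in one step. Note that torchvision's `ImageFolder` loader expects one subfolder per class inside each split, so an extra class-level folder may be needed depending on how the data is loaded.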

After creating the test/train subfolders and moving the images into the directory, I decided to do the same with non-L.A. images, but in a different directory.

Sampling non-L.A. Images

I sampled ~1200 images of non-L.A. art. I would recommend sampling without replacement to avoid duplicate rows, which cause the same image to be downloaded more than once and overwritten. Duplicates also shrink the number of unique images in the sample and waste download time. I made the mistake of downloading from a sample that was drawn with replacement, and I will write a future post about how I identified and deduplicated the images. The gist of the solution was that I renamed the images to include the objectID as well, which identifies an image because every unique image URL is tied to its metadata. This did not remove all the duplicates, however: even though each URL-objectID pair is unique, the pair appears in multiple rows, one for each sponsor or artist in the dataset. This means you can have multiple copies of the same image whose filenames share the same objectID but vary by artist or sponsor.
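Here is a small sketch of the approach I would recommend in hindsight: collapse rows to one per objectID first, then sample without replacement. The helper names, the row layout, and the filename scheme are hypothetical and only meant to illustrate the idea.

```python
import random


def dedupe_by_object_id(rows):
    """Keep the first row seen for each objectid (rows are dicts)."""
    seen = set()
    unique = []
    for row in rows:
        if row["objectid"] not in seen:
            seen.add(row["objectid"])
            unique.append(row)
    return unique


def sample_without_replacement(rows, k, seed=0):
    """Draw k distinct rows; random.sample never picks a row twice."""
    return random.Random(seed).sample(rows, k)


def image_filename(row):
    """Hypothetical naming scheme: objectid plus the last URL segment."""
    stem = row["url"].rstrip("/").split("/")[-1]
    return f"{row['objectid']}_{stem}.jpg"


# Toy rows (made up): objectid 1 appears twice, once per linked person.
rows = [
    {"objectid": 1, "url": "https://example.org/iiif/aaa", "person": "Artist X"},
    {"objectid": 1, "url": "https://example.org/iiif/aaa", "person": "Donor Y"},
    {"objectid": 2, "url": "https://example.org/iiif/bbb", "person": "Artist Z"},
    {"objectid": 3, "url": "https://example.org/iiif/ccc", "person": "Artist W"},
]

unique_rows = dedupe_by_object_id(rows)
sample = sample_without_replacement(unique_rows, k=2)
filenames = [image_filename(r) for r in sample]
```

Because the rows are deduplicated before sampling, every filename in the sample is distinct and no download overwrites another.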

Downloading non-Latin American Images

After accounting for the images with similar names, I was able to download ~98% of the sampled images.

This is all the work I’ve done in 2022.

I will follow up with a literature review and discuss models I tested and hope to create in 2023!